Generative AI in software testing: best practices and technologies

The author of this article is tech expert Pieter Murphy.

In the rapidly evolving fields of software development and testing, the push toward automation has brought generative AI into the limelight.

This approach goes beyond the limits of traditional automation. Unlike systems that just follow predefined steps, generative AI can create new and useful outputs on its own.

Tools like ChatGPT have many uses in automated testing and Quality Assurance (QA), so it's important for tech professionals to understand this new approach.

The impact of generative AI on software quality assurance

Since its inception, the Quality Assurance world has seen significant advancements, continually adapting to meet the rapidly changing needs of the technological landscape.

This evolution has taken software professionals from manual testing and scripted automation to data-driven testing.

Generative AI technologies powered by advanced Large Language Models (LLMs) are changing the approach to QA by automating much of the work that goes into testing.

This allows testers to automate the processes of test generation, error detection, and performance evaluation, consequently boosting testing efficiency, minimizing human error, and speeding up release cycles.

Check out our test automation engineer overview to learn more about what the role entails.

The benefits and the challenges of using generative AI in testing

Artificial intelligence in testing is revolutionizing the QA sector. However, as with any revolutionary technology, taking full advantage of generative AI in software testing brings with it a unique set of challenges.

This calls for a critical review of the potential benefits and obstacles that come with the transition from manual to automated testing.

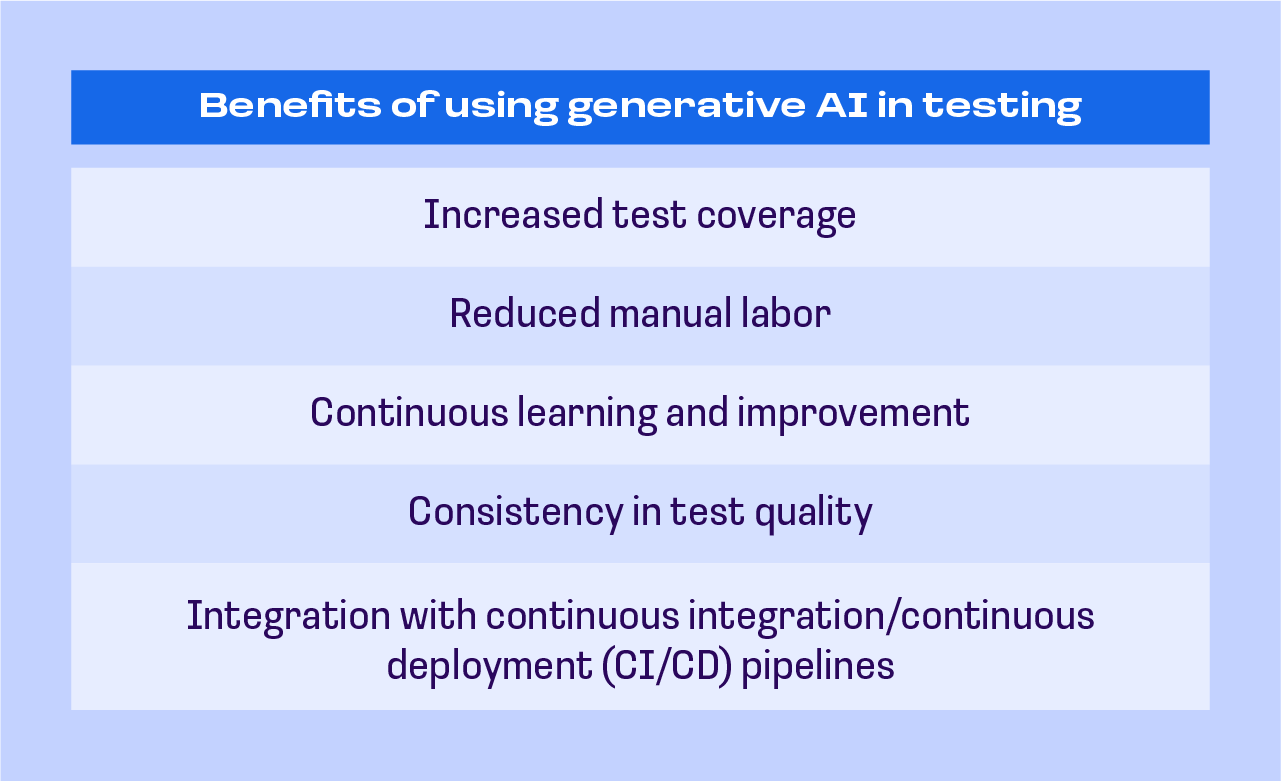

Benefits of using generative AI in testing

Increased test coverage

One significant advantage of generative AI for test automation is its ability to create a wide range of test scenarios that cover more ground than traditional methods.

This technology can support comprehensive software scans and help unearth vulnerabilities and bugs that might otherwise go unnoticed, increasing the software’s robustness and reliability.

Reduced manual labor

Gen AI can automate test creation, reducing the need for continuous and repetitive manual testing. This is especially useful in areas such as regression testing.

Gen AI for test automation introduces innovative ideas while saving valuable time and resources, allowing QA professionals to focus on more complex tasks that need creativity and human intuition.

Continuous learning and improvement

Many AI models, including generative ones, can improve over time when they are retrained or fine-tuned on new data. As you expose the AI to more scenarios, its ability to generate tests that accurately reflect the system’s behavior can improve.

Consistency in test quality

Generative AI offers a consistency that may be difficult to achieve manually. Companies could leverage AI to maintain a high standard of test cases, reducing human errors arising from repetitive and monotonous tasks.

Integration with continuous integration/continuous deployment (CI/CD) pipelines

With regard to implementing DevOps practices, generative AI can prove to be a game changer. Its ability to swiftly create tests makes it ideal for CI/CD pipelines, thus enhancing the efficiency and speed of development and delivery processes.

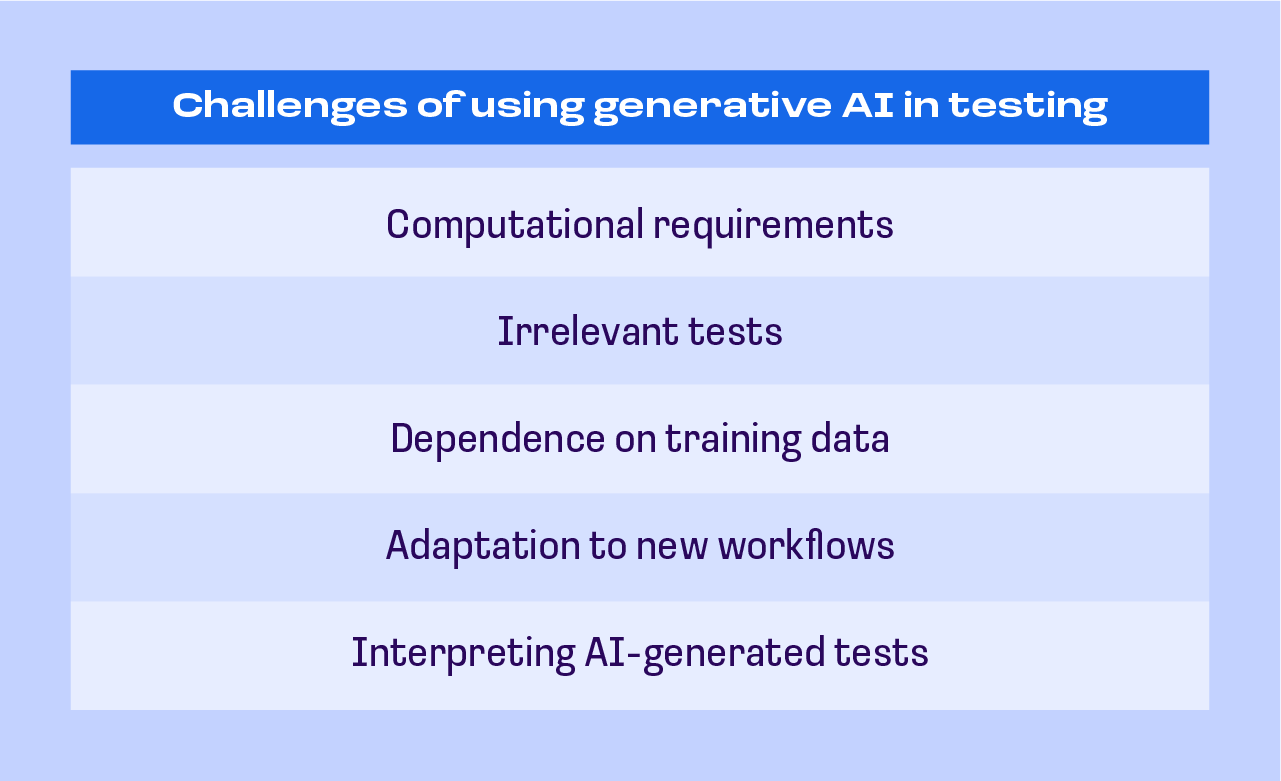

Challenges of using generative AI in testing

Charting the roadmap for QA automation testing reveals significant potential advantages, but it is also important to understand the challenges of this technology before implementation.

Computational requirements

Generative AI, especially models like large Transformers or Generative Adversarial Networks (GANs), needs substantial computational resources for training and operation. This can be challenging for smaller organizations with limited resources.

Irrelevant tests

One of the main challenges of gen AI in software testing is that it may generate nonsensical or irrelevant tests, mostly because it cannot fully comprehend the context or the nuances of a complex software system.

Dependence on training data

The effectiveness of a generative AI model depends heavily on the diversity and quality of its training data. Poor or biased data can lead to inaccurate tests, emphasizing the importance of proper data collection and management.

Adaptation to new workflows

Integrating generative AI into QA requires certain changes in traditional workflows. Existing teams may need to undergo training before effectively utilizing AI tools for testing, and such changes could be met with resistance.

Interpreting AI-generated tests

While generative AI in quality assurance can create tests, comprehending and interpreting such tests, especially when they don’t work, can be challenging. This could sometimes necessitate additional skills or tools to effectively decipher the AI’s output.

To effectively navigate these potential challenges, you’ll need to employ a thoughtful approach when integrating gen AI within QA workflows, together with ongoing adaptation as technology keeps evolving.

Despite the drawbacks, the benefits of gen AI in QA are substantial, suggesting that in the near future, the synergy between human testers and AI will result in a more efficient, robust, and innovative software testing paradigm.

Learn more about the automation testing career path and what it could look like for you.

Types of generative AI models for QA automation

Part of learning how to become an automation tester is understanding the underlying technology driving generative AI. Here’s what you need to know about that:

Transformers

Transformer-based models like GPT-4 are a form of generative AI. They excel at understanding context and sequence within data, which makes them well suited to tasks like generating tests from a description or completing code.

Transformers use a mechanism called self-attention to relate each part of the input to the rest of the context before generating output, which lets them take the broader context into account. They have mostly been used in natural language processing, but their ability to understand context could be leveraged for QA automation.
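For readers curious about the mechanism, the core operation in a Transformer is scaled dot-product attention, as defined in the original Transformer paper (Vaswani et al., 2017, "Attention Is All You Need"). Here Q, K, and V are query, key, and value matrices computed from the input tokens, and d_k is the dimension of the keys:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
```

Each output is a weighted mix of value vectors, where the weights express how relevant each token is to the one being processed. GPT-style models add a causal mask so that each token only attends to the tokens before it.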

Generative adversarial networks

Generative Adversarial Networks (GANs) are a type of generative AI model that creates new data closely resembling the data it was trained on. In the field of QA, GANs might help create a wide variety of testing scenarios based on existing test data.

GANs have two components: a "generator" that makes new data and a "discriminator" that checks if the generated data is authentic.

This two-part setup allows GANs to create highly realistic test scenarios, even though they can be complex to train and require significant computational resources.
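The generator/discriminator setup is usually written as a two-player minimax game. In the formulation from the original GAN paper (Goodfellow et al., 2014), D(x) is the discriminator's estimated probability that x is real, G(z) is a sample the generator produces from random noise z, p_data is the distribution of real data, and p_z is the noise distribution:

```latex
\min_{G} \max_{D} V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
```

The discriminator tries to maximize V by telling real samples from generated ones, while the generator tries to minimize it by producing samples the discriminator accepts as real. This tug-of-war is one reason GANs can be unstable and costly to train.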

Check out our guide on learning with AI-assisted tools to familiarize yourself with AI applications across other aspects of development.

How to use generative AI in the QA workflow

For a QA automation engineer, the next step is putting this knowledge into practice. Using gen AI in testing typically starts with automating the generation of test cases and test data, for example by converting requirements into test scripts and creating synthetic datasets.
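To make the synthetic-dataset idea more concrete, here is a minimal Python sketch of a seeded test-data generator. It is rule-based rather than generative: a gen AI tool would typically produce more varied and realistic records, but two goals shown here carry over, namely reproducibility through a fixed seed and deliberate inclusion of edge cases. The `SyntheticUser` fields and the edge-case values are illustrative assumptions.

```python
import random
import string
from dataclasses import dataclass


@dataclass
class SyntheticUser:
    username: str
    email: str
    age: int


# Illustrative edge cases that should always appear in the dataset.
EDGE_USERNAMES = ["", "a", "x" * 255, "名前", "o'brien"]
EDGE_AGES = [0, 17, 18, 65, 120]


def synthetic_users(n: int, seed: int = 42) -> list[SyntheticUser]:
    """Return n fake users; the same seed always yields the same data."""
    rng = random.Random(seed)  # local RNG, so runs are reproducible
    users = []
    for i in range(n):
        if i < len(EDGE_USERNAMES):
            name = EDGE_USERNAMES[i]  # edge cases first
        else:
            length = rng.randint(3, 12)
            name = "".join(rng.choices(string.ascii_lowercase, k=length))
        # Mix boundary ages with ordinary random ones.
        age = rng.choice(EDGE_AGES) if rng.random() < 0.5 else rng.randint(1, 99)
        # The reserved .test domain ensures no real address is ever used.
        users.append(SyntheticUser(name, f"user{i}@example.test", age))
    return users


for user in synthetic_users(7):
    print(user)
```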

Then, integrate AI for automated code reviews and bug detection, focusing on predicting areas that are at high risk. Use AI to keep test scripts updated continuously and to make regression testing more efficient.

Automate the categorization and reporting of bugs to facilitate quicker triaging. Additionally, use AI to simulate user behavior and conduct load testing. Finally, AI can be applied to root cause analysis, automated test reporting, and documentation maintenance.

This approach streamlines QA processes, enhances test coverage, and speeds up bug detection, ultimately improving software quality and efficiency.
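As a rough illustration of the automated bug categorization step mentioned above, the Python sketch below routes bug reports into categories by keyword matching. It is a deliberately simple stand-in: an AI-based triage tool would typically use a language model or a trained classifier instead of a fixed keyword table, and the categories and keywords here are hypothetical.

```python
import re

# Hypothetical categories and keywords, chosen only for illustration.
CATEGORIES = {
    "ui": ["button", "layout", "css", "render", "screen"],
    "performance": ["slow", "timeout", "latency", "memory", "cpu"],
    "security": ["xss", "injection", "token", "auth", "permission"],
    "crash": ["crash", "exception", "stack trace", "segfault", "500"],
}


def categorize_bug(report: str) -> str:
    """Return the category whose keywords appear most often in the report."""
    text = report.lower()
    scores = {
        category: sum(
            # \b word boundaries, so "auth" does not match "author".
            len(re.findall(rf"\b{re.escape(keyword)}\b", text))
            for keyword in keywords
        )
        for category, keywords in CATEGORIES.items()
    }
    best = max(scores, key=scores.get)  # ties go to the first category listed
    return best if scores[best] > 0 else "uncategorized"


print(categorize_bug("Checkout throws an exception and returns HTTP 500"))  # crash
print(categorize_bug("Search is slow and sometimes hits a timeout"))  # performance
```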

Integration with other technologies of test automation

Generative AI is already showing a lot of promise in the world of QA. However, its capabilities could be further enhanced by combining it with other advanced technologies, with the potential for significant improvements in the accuracy, efficiency, and comprehensiveness of testing.

Reinforcement learning (RL) is one such integration, and generative AI and RL work well together. RL helps AI models learn to make choices by interacting with their environment, receiving rewards for right actions and penalties for wrong ones.

This approach comes in handy for complex testing situations where it's not clear what's 'right' or 'wrong', such as testing an interactive app with many possible user paths and behaviors. An RL-based generative AI model for QA can help here.

The AI system learns from its previous testing actions and keeps on improving its testing strategy to find bugs more efficiently and effectively.
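The reward-and-penalty loop can be sketched with an epsilon-greedy multi-armed bandit, the simplest member of the RL family. A bandit has no notion of app state, whereas a real RL-based tester would model screens and action sequences. In this hypothetical Python example, each "arm" is a user path, and `bug_rates` is a made-up stand-in for the real app: the agent never reads it directly and only observes whether a run found a bug.

```python
import random


def learn_path_values(
    bug_rates: dict[str, float],
    episodes: int = 2000,
    epsilon: float = 0.1,
    seed: int = 0,
) -> dict[str, float]:
    """Epsilon-greedy bandit that learns which user path tends to expose bugs."""
    rng = random.Random(seed)
    paths = list(bug_rates)
    value = {p: 0.0 for p in paths}  # estimated average reward per path
    count = {p: 0 for p in paths}    # how often each path was tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            path = rng.choice(paths)          # explore: random path
        else:
            path = max(paths, key=value.get)  # exploit: best estimate so far
        # Simulated test run: reward +1 if a bug surfaced, small penalty otherwise.
        reward = 1.0 if rng.random() < bug_rates[path] else -0.01
        count[path] += 1
        # Incremental update of the running average reward for this path.
        value[path] += (reward - value[path]) / count[path]
    return value


estimates = learn_path_values({"login": 0.01, "checkout": 0.3, "profile": 0.05})
print(max(estimates, key=estimates.get))
```

Most of the time the agent exploits the path with the best estimated value, but with probability `epsilon` it tries a random one, so it keeps re-checking paths it may have underrated.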

Generative AI in testing also works well with computer vision, an AI area that lets computers understand and interpret real-world visual data. This pairing is useful in QA for apps that rely on visuals, such as in user interface and user experience (UI/UX) testing or game testing.

Computer vision helps the AI model recognize and understand visual parts, while gen AI for testing creates new test cases based on these elements. The result is a QA system that can handle complex, image-based testing scenarios, finding bugs that would be hard to catch with regular automation tools.
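For contrast, the baseline that computer vision improves on is a plain pixel comparison. The Python sketch below uses hypothetical names and represents screenshots as nested lists of 0-255 grayscale values to stay dependency-free. It reports the fraction of pixels that changed beyond a tolerance. It has no idea what a button or a text field is, which is the gap CV-based tools aim to close by recognizing UI elements and ignoring irrelevant differences.

```python
def changed_pixel_ratio(
    baseline: list[list[int]],
    candidate: list[list[int]],
    tolerance: int = 10,
) -> float:
    """Fraction of pixels whose grayscale value differs by more than `tolerance`."""
    if len(baseline) != len(candidate) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(baseline, candidate)
    ):
        raise ValueError("screenshots must have the same dimensions")
    total = changed = 0
    for row_a, row_b in zip(baseline, candidate):
        for a, b in zip(row_a, row_b):
            total += 1
            # Small differences (e.g. anti-aliasing noise) are ignored.
            if abs(a - b) > tolerance:
                changed += 1
    return changed / total if total else 0.0


baseline = [[255, 255], [0, 0]]
candidate = [[250, 255], [0, 200]]  # one small change, one real change
print(changed_pixel_ratio(baseline, candidate))  # 0.25
```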

Generative AI testing tools

Generative AI testing tools are revolutionizing software testing by automating the creation of test cases, identifying defects, and enhancing testing coverage.

A variety of generative AI tools for software testing have emerged that utilize AI to improve software quality while minimizing manual effort.

In addition to the core skills needed to start working in QA, you will need to know the top generative AI software testing tools:

Testim

Testim employs machine learning to assist in the creation, execution, and maintenance of test cases. Its generative AI can automatically generate robust tests by analyzing both the code and the application's behavior.

Advantages:

- Self-healing tests that adjust to changes in the application’s UI.

- Rapid test case generation, reducing the time spent on manual testing.

- Seamless integration with CI/CD pipelines.

Disadvantages:

- Performance may decline with very complex applications.

- Requires some customization for tests involving specific business logic.

Functionize

Functionize utilizes AI to create and execute functional, end-to-end tests by understanding the intent behind test scripts. It employs Natural Language Processing (NLP) to enable users to describe test cases in plain English, which are then transformed into test steps.
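Functionize's NLP engine is proprietary, so the following is only a toy illustration of the general idea of turning plain-English steps into structured test actions. This hypothetical Python sketch uses a small fixed regex grammar, and the step phrasings and action names are made up; a real NLP-based tool handles far more varied wording.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    action: str
    target: str
    value: Optional[str] = None


# Each pattern maps one supported English phrasing to a structured action.
PATTERNS = [
    ("navigate", re.compile(r"^(?:open|go to) (?P<target>\S+)$", re.I)),
    ("type", re.compile(r'^type "(?P<value>[^"]*)" into (?:the )?(?P<target>.+)$', re.I)),
    ("click", re.compile(r"^click (?:on )?(?:the )?(?P<target>.+)$", re.I)),
    ("assert_text", re.compile(
        r'^verify (?:that )?(?:the )?(?P<target>.+?) (?:shows|contains) "(?P<value>[^"]*)"$',
        re.I,
    )),
]


def parse_steps(text: str) -> list[Step]:
    steps = []
    for line in text.strip().splitlines():
        line = line.strip().rstrip(".")
        if not line:
            continue
        for action, pattern in PATTERNS:
            match = pattern.match(line)
            if match:
                groups = match.groupdict()
                steps.append(Step(action, groups["target"], groups.get("value")))
                break
        else:  # no pattern matched this line
            raise ValueError(f"could not understand step: {line!r}")
    return steps


spec = """
Open https://shop.example.test
Type "alice@example.test" into the email field
Click the login button
Verify that the header shows "Welcome, Alice"
"""
for step in parse_steps(spec):
    print(step)
```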

Advantages:

- AI-driven testing that scales across various environments.

- NLP-based test case generation is user-friendly for non-technical users.

- Supports visual testing, which is beneficial for UI-heavy applications.

Disadvantages:

- Initial setup can be complicated for very large projects.

- Some limitations exist in advanced customization.

Mabl

Mabl emphasizes low-code test automation powered by AI. It learns from test executions, automatically generates scripts, detects anomalies, and can even fix failing tests.

Advantages:

- Integrated AI-powered auto-healing tests minimize maintenance efforts.

- Well-supported visual testing and regression testing.

- Built-in analytics provide insights into test performance and failure causes.

Disadvantages:

- Steeper learning curve for highly customized workflows.

- May need more manual adjustments in highly dynamic applications.

Applitools

Applitools leverages AI for visual testing, enabling testers to assess the visual elements of applications on various devices, browsers, and screen sizes.

Advantages:

- Great for validating visuals across different browsers.

- AI-driven visual comparisons can identify subtle differences.

- Seamlessly integrates with popular automation frameworks like Selenium and Cypress.

Disadvantages:

- The focus is mainly on UI testing, which may not suit nonvisual assessments.

- Customization and integration need technical know-how.

Sahi Pro

Sahi Pro employs AI to automatically create test cases, particularly for web applications. It is effective for cross-browser testing and accommodates various test types, including functional and regression testing.

Advantages:

- Extensive support for numerous browsers and operating systems.

- Test creation can be automated using record and playback functionalities.

- AI capabilities lessen the maintenance burden for test cases.

Disadvantages:

- The user interface could be more intuitive.

- Compared to competitors, it has limited support for mobile applications.

Test.ai

Test.ai is one of the most popular tools for mobile testing. It can generate tests automatically by utilizing machine learning to interact with apps in a manner similar to human testers.

Advantages:

- AI-driven tests can scale across thousands of devices for mobile app testing.

- Simple to integrate into the mobile development process.

- Tests require less coding knowledge, making them accessible to non-developers.

Disadvantages:

- Primarily focused on mobile, which restricts its application for web testing.

- Advanced features might need more technical expertise for effective implementation.

Percy by BrowserStack

Percy is a visual testing tool that works with BrowserStack. It automates UI testing and employs machine learning to compare visual components across different builds.

Advantages:

- Automated visual testing across various browsers.

- Provides detailed insights into visual bugs or inconsistencies.

- Strong integration with CI/CD pipelines and BrowserStack’s cross-browser testing platform.

Disadvantages:

- Focuses only on visual testing and isn’t a full end-to-end testing tool.

- Has limited customization options for non-UI testing.

Rainforest QA

Rainforest QA employs machine learning alongside a crowd-testing strategy to facilitate the creation and execution of functional and regression tests. Users can formulate tests in straightforward English, while AI takes care of generating the corresponding test scripts.

Advantages:

- Rapid test case generation through AI.

- No coding skills are required to create and run tests.

- Crowdsourced testers can identify edge cases and uncommon bugs.

Disadvantages:

- It may necessitate extra steps for integration into complex workflows.

- Limited customization options for specific scenarios.

Key features to consider

When selecting the appropriate generative AI software testing tool, consider these features:

- AI-Powered Test Generation: The capability to automatically create test cases based on application behavior or code analysis.

- Self-Healing: AI should be able to resolve failing tests by recognizing changes in the application.

- Low-Code/No-Code Capabilities: Tools that enable non-technical users to design and execute tests using plain language or simple interfaces.

- Cross-Platform Testing: Support for testing across various devices, browsers, and operating systems.

- Integration with CI/CD: Smooth integration into continuous integration and delivery pipelines for automated testing.
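To illustrate self-healing in its simplest form, the Python sketch below tries an ordered list of alternative selectors when the primary one stops matching, and records each "heal" so the test can be updated later. Commercial tools typically go further (for example, by scoring many element attributes with ML), so treat this as a hypothetical, simplified model: `page` here is just a dictionary from selectors to element IDs, standing in for a real browser page.

```python
from typing import Optional


def find_element(
    page: dict[str, str],
    selectors: list[str],
    healing_log: list[str],
) -> Optional[str]:
    """Try selectors in order; log whenever a fallback had to be used."""
    primary = selectors[0]
    for selector in selectors:
        element = page.get(selector)
        if element is not None:
            if selector != primary:
                # Record the heal so the test's primary selector can be updated.
                healing_log.append(f"{primary!r} not found; used {selector!r}")
            return element
    return None  # every selector failed: a genuine test failure


# After a UI change, the button lost its id but kept its visible text.
page_after_change = {"text=Submit": "element-42"}
log: list[str] = []
print(find_element(page_after_change, ["#submit-btn", "[data-test=submit]", "text=Submit"], log))
print(log)
```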

Generative AI use cases with examples

To better understand generative AI in QA, let's break it down into three main generative AI use cases in software testing:

- Generating examples from descriptions

- Code completion

- Generating individual tests based on description

Generating examples from descriptions

This use case relies on AI models that can comprehend a specification or description and then generate relevant examples. These examples can be anything from test cases to full code snippets, depending on the given context.

For example, you can use OpenAI's ChatGPT to generate a test example in a specific programming language by giving it a short description.

Think about a scenario where a project manager inputs a task like 'Review onboarding process.' The AI quickly interprets the request and generates a detailed review checklist, streamlining the task and saving valuable time that would otherwise be spent creating it manually.

Code completion

Generative AI can also be used for code completion, a feature that anyone who has coded is likely familiar with. Traditional code completion tools tend to be somewhat inflexible and limited, often struggling to grasp the broader context.

Generative AI for QA testing has the potential to change this by taking into account the wider programming context and even prompts found in comments.

A prime example is GitHub Copilot, which uses AI to suggest code snippets based on the surrounding code and prompts in comments. This speeds up coding and can help reduce routine mistakes, although its suggestions still need review.

Generating individual tests based on description

Finally, generative AI for automation testing can be utilized to create comprehensive tests based on given descriptions. Rather than just providing examples, the AI understands the requirements and generates a complete test.

This process includes not only creating the necessary code but also establishing the required environment for the test.

For instance, given a description like “Develop a full test for a shopping cart checkout process,” the AI could evaluate the requirement, generate the appropriate code, and set up a test environment, reducing the need for human involvement.
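As a hedged illustration, here is the kind of test suite such a prompt might produce, written in Python with the standard `unittest` module. The `Cart` class is a hypothetical system under test, included only so the example runs on its own; real AI output would target your actual code and would still need review.

```python
import unittest
from decimal import Decimal


class Cart:
    """Hypothetical system under test, included so the example runs."""

    def __init__(self) -> None:
        self.items: dict[str, tuple[Decimal, int]] = {}  # sku -> (price, qty)

    def add(self, sku: str, price: Decimal, qty: int = 1) -> None:
        if qty <= 0:
            raise ValueError("quantity must be positive")
        _, existing = self.items.get(sku, (price, 0))
        self.items[sku] = (price, existing + qty)

    def total(self) -> Decimal:
        return sum((p * q for p, q in self.items.values()), Decimal("0"))

    def checkout(self) -> Decimal:
        if not self.items:
            raise ValueError("cannot check out an empty cart")
        amount = self.total()
        self.items.clear()
        return amount


class CheckoutTests(unittest.TestCase):
    def test_checkout_returns_total_and_empties_cart(self):
        cart = Cart()
        cart.add("book", Decimal("12.50"), qty=2)
        cart.add("pen", Decimal("1.25"))
        self.assertEqual(cart.checkout(), Decimal("26.25"))
        self.assertEqual(cart.total(), Decimal("0"))

    def test_adding_same_item_accumulates_quantity(self):
        cart = Cart()
        cart.add("pen", Decimal("1.25"))
        cart.add("pen", Decimal("1.25"), qty=3)
        self.assertEqual(cart.total(), Decimal("5.00"))

    def test_empty_cart_cannot_check_out(self):
        with self.assertRaises(ValueError):
            Cart().checkout()

    def test_rejects_non_positive_quantity(self):
        with self.assertRaises(ValueError):
            Cart().add("book", Decimal("12.50"), qty=0)
```

Save it to a file and run it with `python -m unittest` followed by the file name. Using `Decimal` rather than floats keeps currency arithmetic exact, which is the kind of detail worth checking in any generated test.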

Future trends and opportunities of Gen AI in software testing

Generative AI is an exciting and rapidly advancing field that has the potential to transform automated software testing.

By streamlining the creation of test cases, test automation using generative AI can enable testers to save time and effort while enhancing the quality of their tests.

Looking ahead, generative AI is expected to play a significant role in automating various software testing tasks, such as:

- Generating test cases: Gen AI for testing can create test cases specifically designed for particular software applications. This can help make tests more thorough and cover more potential failure points.

- Exploratory testing: Generative AI can facilitate exploratory testing, a method that involves examining software in an open-ended way. This approach can help uncover unexpected and undocumented bugs.

- Visual testing: Generative AI can also automate visual testing, which assesses the visual aspects of software. This helps verify that the software appears correct and adheres to design specifications.

Beyond these specific applications, generative AI is likely to enhance the overall efficiency and effectiveness of automated software testing. For instance, generative AI can be utilized to:

- Identify and prioritize test cases: It can pinpoint test cases that are most likely to uncover bugs, allowing testing efforts to concentrate on the most critical areas.

- Automate test maintenance: Generative AI can streamline the upkeep of test cases, ensuring they remain current as software evolves.
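A simple, non-AI heuristic shows what test prioritization can look like in practice; an AI-based tool would learn these signals instead of hard-coding them. In this hypothetical Python sketch, each test's score is its smoothed historical failure rate plus a bonus for every changed file it covers. The data model and the weighting are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class HistoryEntry:
    name: str
    runs: int
    failures: int
    covered_files: frozenset[str]


def prioritize(history: list[HistoryEntry], changed_files: set[str]) -> list[str]:
    """Return test names ordered from most to least likely to find a bug."""

    def score(entry: HistoryEntry) -> float:
        # Laplace smoothing: a never-run test starts at 0.5 instead of 0.
        failure_rate = (entry.failures + 1) / (entry.runs + 2)
        # +1 for each file touched by the current change that this test covers.
        overlap = len(entry.covered_files & changed_files)
        return failure_rate + overlap

    return [e.name for e in sorted(history, key=score, reverse=True)]


history = [
    HistoryEntry("test_login", runs=100, failures=1, covered_files=frozenset({"auth.py"})),
    HistoryEntry("test_checkout", runs=100, failures=20, covered_files=frozenset({"cart.py"})),
    HistoryEntry("test_search", runs=0, failures=0, covered_files=frozenset({"search.py"})),
]
print(prioritize(history, changed_files={"auth.py"}))
# ['test_login', 'test_search', 'test_checkout']
```

Because the change touches `auth.py`, the rarely failing login test jumps to the front; with no changed files, the ordering falls back to the smoothed failure rates alone.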

To help you learn how to become a QA automation engineer, find your next book on automation testing in our article on the topic and brush up on the fundamentals to give yourself an edge.

Learn how to use generative AI in automation testing with the EngX course

Engineering Excellence (EngX) was established in 2014 to tackle the typical challenges faced by developers, teams, and projects. It offers software engineers a range of products, tools, and services designed to enhance their productivity in software development.

EngX has demonstrated its value and now leads the engineering industry by promoting high-quality engineering practices and helping teams learn how to transition from manual to automated testing regimes.
